In-the-wild Facial Highlight Removal via Generative Adversarial Networks

Abstract

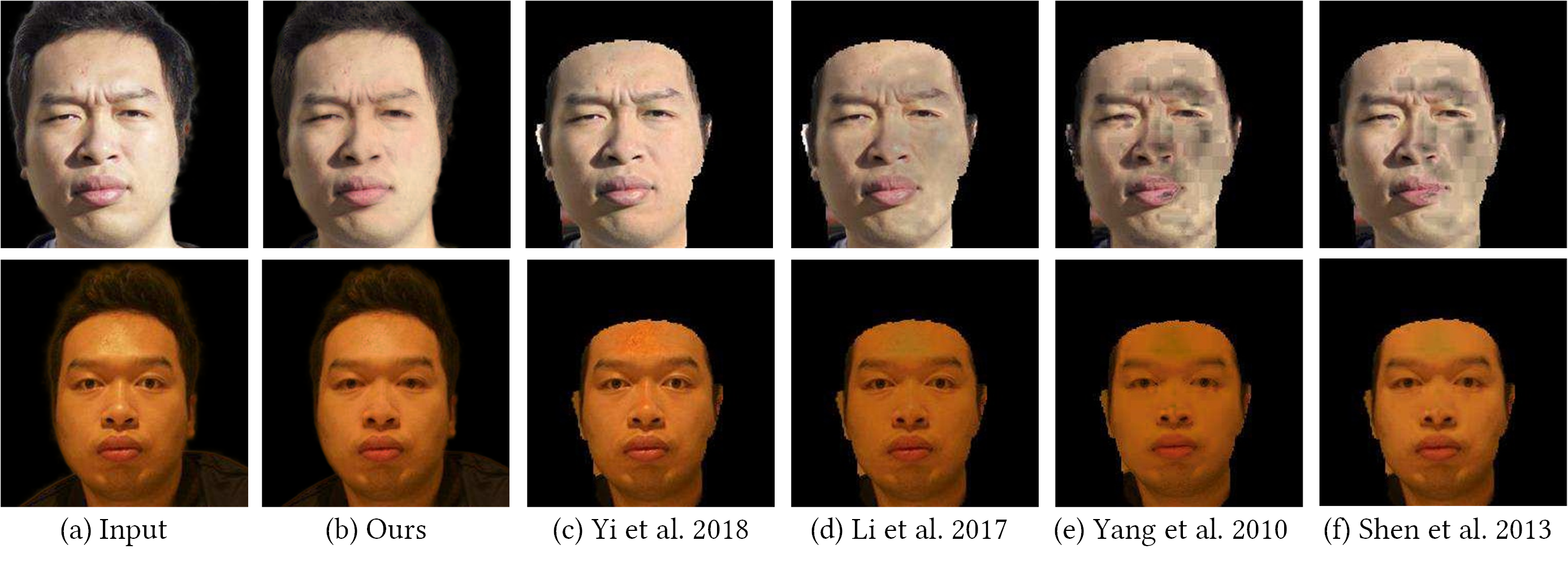

Facial highlight removal techniques aim to remove specular highlights from facial images. This can improve image quality and help downstream tasks such as face recognition and reconstruction. However, previous learning-based techniques often fail on in-the-wild images. They require paired training data (images with and without highlights), so their models are typically trained on synthetic or laboratory images. In contrast, we propose a highlight removal network that is pre-trained on a synthetic dataset and then fine-tuned on unpaired in-the-wild images. To achieve this, we propose a highlight mask guidance training technique that enables Generative Adversarial Networks (GANs) to use in-the-wild images when training a highlight removal network. We observe that almost every in-the-wild image contains highlights in some regions. Even so, small highlight-free patches can still provide useful information to guide highlight removal. This motivates us to train a region-based discriminator that distinguishes highlight regions from non-highlight regions in a facial image, and to use it to fine-tune the generator. In our experiments, our technique achieves high-quality results compared with state-of-the-art highlight removal techniques, especially on in-the-wild images.
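
The abstract does not specify how the region-based discriminator is built or trained. The following is a minimal sketch of one way to turn a highlight mask into per-region supervision. It assumes a binary per-pixel highlight mask, non-overlapping square patches, a highlight-fraction threshold, and a binary cross-entropy objective. The discriminator's per-patch probabilities are taken as given. All names and parameters (`patch_labels`, `max_highlight_frac`, `discriminator_loss`, `generator_loss`) are hypothetical. This is not the authors' implementation.

```python
import math
from typing import List

Mask = List[List[int]]      # hypothetical: 1 = highlight pixel, 0 = no highlight
Grid = List[List[float]]    # per-patch values (labels or discriminator probabilities)


def patch_labels(mask: Mask, patch: int, max_highlight_frac: float = 0.05) -> Grid:
    """Label each non-overlapping patch of a binary highlight mask.

    Returns 1.0 for a (nearly) highlight-free patch, the "non-highlight" class,
    and 0.0 for a patch whose highlight fraction exceeds the threshold.
    Trailing rows/columns that do not fill a whole patch are ignored.
    """
    h, w = len(mask), len(mask[0])
    labels: Grid = []
    for top in range(0, h - patch + 1, patch):
        row = []
        for left in range(0, w - patch + 1, patch):
            hits = sum(mask[y][x]
                       for y in range(top, top + patch)
                       for x in range(left, left + patch))
            frac = hits / (patch * patch)
            row.append(1.0 if frac <= max_highlight_frac else 0.0)
        labels.append(row)
    return labels


def bce(prob: float, target: float, eps: float = 1e-7) -> float:
    """Binary cross-entropy for one predicted probability (clamped for stability)."""
    p = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(scores: Grid, labels: Grid) -> float:
    """Mean per-patch BCE: teaches the discriminator highlight vs. non-highlight regions."""
    terms = [bce(s, t)
             for s_row, t_row in zip(scores, labels)
             for s, t in zip(s_row, t_row)]
    return sum(terms) / len(terms)


def generator_loss(scores_on_output: Grid) -> float:
    """Push every patch of the generator's output toward the non-highlight class."""
    ones = [[1.0] * len(row) for row in scores_on_output]
    return discriminator_loss(scores_on_output, ones)


if __name__ == "__main__":
    # 4x4 mask with a highlight only in the top-left 2x2 block.
    mask = [[0] * 4 for _ in range(4)]
    for y in range(2):
        for x in range(2):
            mask[y][x] = 1
    print(patch_labels(mask, patch=2))  # [[0.0, 1.0], [1.0, 1.0]]
```

Labeling patches rather than whole images is what lets in-the-wild photos contribute to training. For example, a face with a highlight on the forehead still supplies highlight-free cheek patches as non-highlight examples.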

Resources and Downloads

| Paper | Supplementary | Video |
|---|---|---|

Acknowledgements

This work was supported by the National Key R&D Program of China (2018YFA0704000), the NSFC (Nos. 61822111, 61727808, 61671268, 62025108), and the Beijing Natural Science Foundation (JQ19015, L182052). Xun Cao is the corresponding author.